“We should program the robots in English” - Judy Savitskaya, April 2022

The need to write code has always been a huge barrier that prevents lab automation from becoming widespread. Historically, biologists weren’t taught programming. As a result, robot manufacturers in life science usually present users with GUIs. Short term, GUIs are a win: they let users quickly get robots to do simple tasks in a no-code setting. Long term, GUIs shoot lab automation in the foot. Protocols developed in a GUI just can’t be that sophisticated or scalable. This raises a big question: if lab automation is ever going to change how we do biology research, how do we resolve the tension between GUI and coding interfaces?

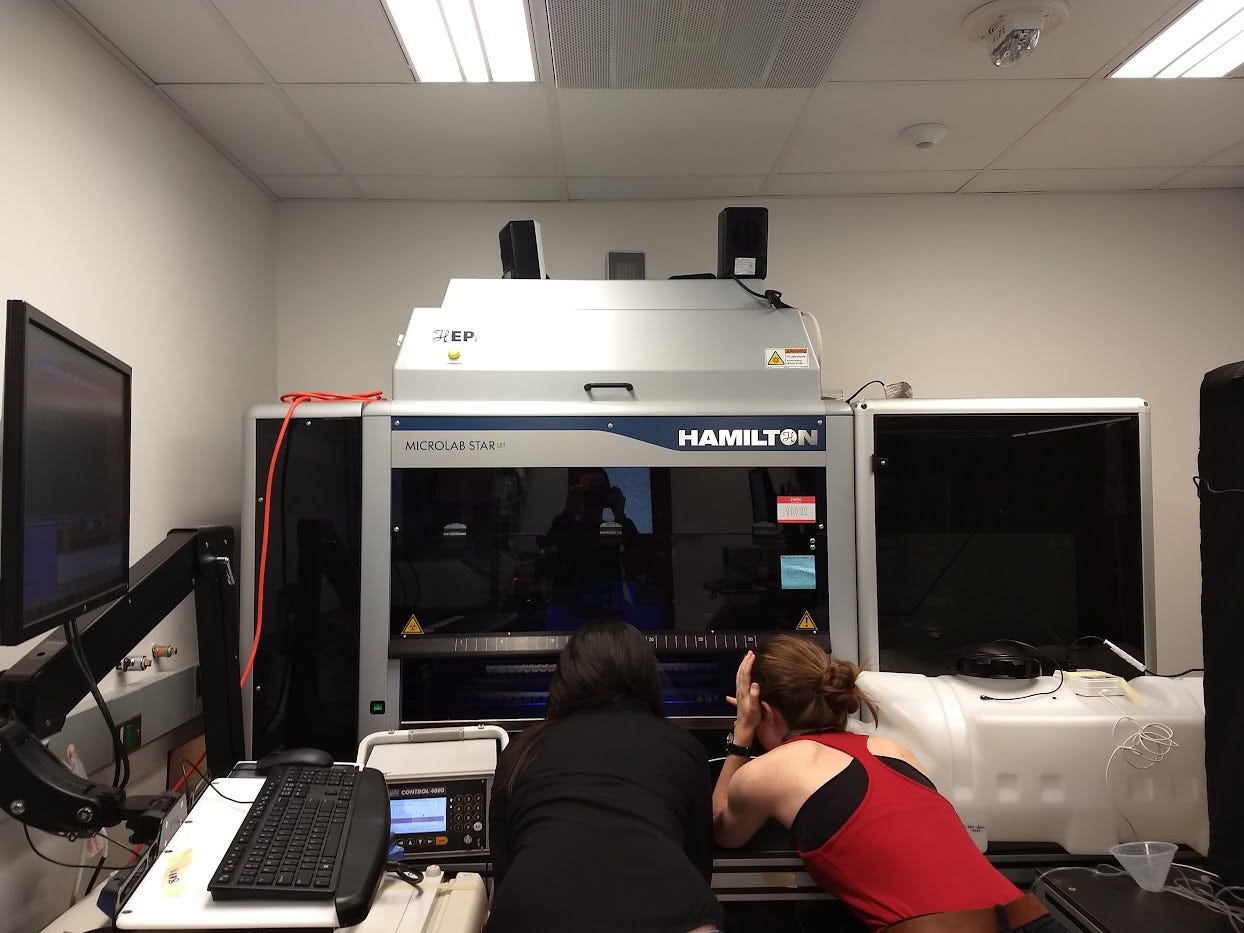

This time last year, in April 2022, it was the early days of the Bioautomation Challenge. The first people to get access to Emerald Cloud Lab were really struggling to get over the learning curve and become productive in the cloud. Fully-automated biology faces the programming-barrier-to-entry on steroids. A lot of first users were learning programming, laboratory robotics, and science all at the same time. The learning curve was steep to the point of being impossible to surmount. I was talking to Judy Savitskaya, a friend and fellow bioengineer, about these challenges. She said something that really stuck with me. “We should program the robots in English.”

At the time, “programming the robots in English” seemed like a futuristic idea that was just barely supported by scientific fact — AlphaCode had just come out, but ChatGPT was still six months away. A year later, “programming the robots in English” is an inevitability. I expect that within a couple years, natural language interfaces will resolve the GUI vs coding tension that has plagued lab automation since the beginning.

Unfortunately, it won’t be enough.

Natural language is insufficient to represent biological protocols

“It works in my hands” is a spectacular and very revealing turn of phrase that I have only ever heard in experimental life science. For people who haven’t heard it, the phrase works like this: at the water cooler, one person might say “gee whiz, I just can’t figure out how to get good DNA yield,” and another person might say, “in my hands, I usually get about 20 µL of 30-60 ng/µL DNA with your same culture size.” This phrase exists because experienced experimental biologists know that to really specify a protocol you need to describe everything from the culture size to the media to whose literal hands are doing the science. You need to be way, way more specific than you’d think. You need to be way more specific than humans-writing-natural-language can achieve.

Often if you’re trying a new protocol in biology you may need to do it a few times to ‘get it working.’ It’s sort of like cooking: you probably aren’t going to make perfect meringues the first time because everything about your kitchen — the humidity, the dimensions and power of your oven, the exact timing of how long you whipped the egg whites — is a little bit different than the person who wrote the recipe. Really simple protocols may work out of the box: NEB kits are the pre-mixed cake of biology and are designed to be super easy. Protocols from fancy papers are just like recipes from glossy cooking magazines. They look nice, but depending on the writer, they may work better or worse.

The time it takes to fiddle around and get something working is the penalty we pay for the fact that natural language is insufficient to represent biological protocols. Biology as a field is doing its best with a legitimately finicky experimental landscape. We write protocols in natural language, and transferring them to a new researcher usually takes some optimization. It kind of works.

To put it in the lingo of machine learning, natural language biological protocols aren’t zero-shot reproducible. You need to gather data before you have a functional protocol in life science. A scientist needs to try it a few times, using their observations along the way to rapidly converge upon a technique that works “in their hands”.
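One way to picture the gap is to write down what a protocol would need to state explicitly and then check what the prose version leaves out. The sketch below is a minimal Python illustration, not a real schema: the `MiniprepSpec` class, its field names, and the example values are all assumptions made up for this example.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class MiniprepSpec:
    """Hypothetical structured record for one DNA prep; field names are illustrative."""
    culture_volume_ml: Optional[float] = None
    growth_medium: Optional[str] = None
    elution_volume_ul: Optional[float] = None
    incubation_temp_c: Optional[float] = None
    operator: Optional[str] = None  # "whose literal hands are doing the science"


def unspecified(spec: MiniprepSpec) -> list[str]:
    """Return the names of fields that were never filled in."""
    return [f.name for f in fields(spec) if getattr(spec, f.name) is None]


# What a written protocol might pin down (values are invented for illustration).
as_written = MiniprepSpec(culture_volume_ml=5.0, elution_volume_ul=20.0)
print(unspecified(as_written))
```

Running this prints `['growth_medium', 'incubation_temp_c', 'operator']`. A real protocol has far more than five parameters, and many of the ones that matter are not known in advance, which is the point of the argument above.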

How big is the “programming robots in English” win?

We’re already starting to see some lab automation software getting hooked up to GPT-4, and there will surely be more to come. What can this give us, and what can’t it?

Big wins:

Programming the robots in English will resolve lab automation’s longstanding user interface dilemma. It won’t be worse than a GUI, and will probably be better! Hopefully natural language will have the best of both the GUI and coding worlds.

Better interfaces for lab automation → more widespread adoption!

What it won’t do:

Programming the robot is unfortunately just the first hurdle. Once you have code that runs, you then face the challenge of actually getting the protocol to work. You’re still not going to be able to just point your cloud lab at a DOI and say “replicate these experiments for me” :(

Lab automation requires that things actually work in physical space. Atoms are moved around. For something like a liquid handler, a human is still going to be on the hook for defining the deck heights, selecting and rearranging plate carriers, teaching labware, and optimizing liquid classes. This is something that can be improved by adding more physical sensors, not by adding an LLM.

We still have work to do in terms of determining how to accurately represent biological protocols.

If AI could replicate human biologist skill, would we be satisfied? The answer is no! Humans aren’t very good at experimental biology. If AI just replicates what humans can do, we’re still at the takes-weeks-of-fiddling-to-get-a-protocol-working stage. This is the paradigm we need to bust out of.

Let’s make AI+lab automation better than human scientists

I’ve written before about opportunities to use robots to do experiments that humans could never do. Brute force is a key advantage robots have over humans. What can an AI do that no human scientist can? This is where the real opportunities are, and getting there takes more than just programming the robots in English. Here are a few ways that LLMs can have a more meaningful impact on how life science is done:

Read every protocol ever written. No human has the damn time to read all this literature. AI does. The hypothesis here is that instead of attempting to replicate a single protocol in isolation, perhaps more information is to be gained by comparing and contrasting every variant of that protocol ever published. I expect this hypothesis is correct because this is exactly what humans do to troubleshoot when something doesn’t work. TLDR: the big wins will come from combining natural language interfaces for automation with general scientific reasoning agents that can search for and pull in information.

Hand off protocols from humans to robots. The literature is incomplete because it’s only natural language. How do we recapture that missing data? I don’t have time to watch videos of a million graduate students doing minipreps, but AI does. We need to backfill metadata on protocols in order to bootstrap into a world where robots are doing most of the science in a manner that captures metadata. AI can help us get to that first corpus of open-source automated biology methods with multimodal learning.

Know all metadata about every protocol ever executed in a cloud lab. We don’t live in a world where robots are doing most of the experiments in life science. But we will one day. Thinking about all the metadata generated by all the lab automation in the world is something no human has time to do, but AI does. When we get there, we’ll be freed from human-readable natural language descriptions of protocols entirely. Instead of playing catchup trying to remember quite how we did things, we can let AI sort through all the metadata that’s on hand to understand what’s important. That will be the real ChatGPT moment for experimental science.
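The compare-and-contrast idea in the first item above can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any existing agent works: the `compare_variants` function, the parameter names, and the three example variants are all hypothetical, and it assumes someone (or some model) has already extracted the parameters from each paper.

```python
from collections import defaultdict


def compare_variants(variants: dict[str, dict[str, object]]) -> tuple[dict, dict]:
    """Split parameters into those every variant agrees on and those that differ."""
    seen = defaultdict(dict)  # parameter name -> {variant name: value}
    for name, params in variants.items():
        for key, value in params.items():
            seen[key][name] = value

    shared, varying = {}, {}
    for key, by_variant in seen.items():
        values = set(by_variant.values())
        reported_everywhere = len(by_variant) == len(variants)
        if reported_everywhere and len(values) == 1:
            shared[key] = values.pop()
        else:
            # Either the values disagree or some variant never reported this parameter.
            varying[key] = by_variant
    return shared, varying


# Made-up parameter sets standing in for three published versions of one protocol.
variants = {
    "paper_a": {"elution_volume_ul": 20, "spin_minutes": 1, "medium": "LB"},
    "paper_b": {"elution_volume_ul": 50, "spin_minutes": 1, "medium": "LB"},
    "paper_c": {"elution_volume_ul": 30, "spin_minutes": 1},
}
shared, varying = compare_variants(variants)
print(shared)           # parameters all three variants agree on
print(sorted(varying))  # parameters that differ or are missing somewhere
```

Here `shared` comes out as `{'spin_minutes': 1}` and the varying parameters are `elution_volume_ul` and `medium`. Parameters that differ between sources, or that some sources never mention, are natural candidates to look at first when a protocol fails to transfer. Deciding what to actually test is left open here.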

Want more?

I think a lot about the intersection of LLMs and lab automation as part of Align to Innovate, the nonprofit I founded that hosts the Bioautomation Challenge and several other programs. We’d love to connect with folks who are thinking about this! Email is best: ping contact@alignbio.org. For more essays on automation and synthetic biology, subscribe to my Substack below.

Thanks to

Michelle Lee, Carter Allen, TJ Brunette, Judy Savitskaya, Darren Zhu, Sam Rodriques, Pete Kelly, and Dana Gretton.

"It works in my hands" is like "it works on my machine" in software

Thanks for the writeup! What's an example of a general scientific reasoning agent? If you have a protocol for creating e.g. a strand of circular RNA, the agent searches through published protocols and understands variations, and then what does it do with the variations? Just tries them all?